Installing NVIDIA GPU Drivers On Oracle Cloud

Fabio Augusto Miranda Martins

on 22 April 2020

Tags: cloud-init, GPGPU, GPU, Oracle

It’s no secret that GPGPUs (general-purpose GPUs) have been on the rise over the past five years. With cryptocurrency blossoming, AI/ML blooming, and heavy-duty simulations still going strong, the need for GPUs is only increasing. With advances in computer vision, AR/VR, and other resource-intensive applications on the horizon, the trend shows no sign of slowing either.

Recent reports state that datacenter-based GPU deployments are the fastest-growing sector, and again, that’s no surprise. The cloud has had its own incredible growth over the years, and it’s only natural that these two technologies are starting to work in harmony. As a matter of fact, most public clouds have GPU offerings, which leads us to the meat of this blog post: Oracle Cloud.


I’m proud to be writing about a new feature that the Canonical team has been working on: NVIDIA GPU driver installation made easy for clouds. Previously, installing NVIDIA GPU drivers was a very manual process. You had to work out your kernel version and the matching driver, and then search through package archives to find the right package. Now, you can automate this entire process on first boot.

Let’s dive in, shall we?

(Note: I will show examples using the Oracle CLI, but you can do this through the Oracle web console as well.)

Launching a new instance

Using the CLI, let’s take a look at the command used to launch an instance:

oci compute instance launch --shape VM.GPU2.1 --availability-domain "qIZq:US-ASHBURN-AD-1" --compartment-id <compartment id> --assign-public-ip true --subnet-id <subnet id> --image-id "ocid1.image.oc1.iad.aaaaaaaakdybjqysepqx2iwne24uxdx4apzdcn2ll7kd66a52fgs7w4mz3vq" --ssh-authorized-keys-file <ssh-key-file>

I’ve elided some of my setup details from the command I’m using; feel free to plug in your own arguments if you’d like to follow along.
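If you find the one-liner hard to read, the same launch can be wrapped in a small shell function that takes the elided values from variables. This is only a sketch: the function name `launch_gpu_instance` and the variable names (`COMPARTMENT_ID`, `SUBNET_ID`, `IMAGE_ID`, `SSH_KEY_FILE`, `AVAILABILITY_DOMAIN`) are placeholders I made up for illustration, while the flags are the ones from the command above.

```shell
# Hypothetical wrapper around the launch command shown above.
# All variable names are placeholders chosen for this sketch.
launch_gpu_instance() {
    # Abort with a message if a required value is missing
    # (in a script, ${VAR:?} makes the shell exit).
    : "${COMPARTMENT_ID:?set COMPARTMENT_ID to your compartment OCID}"
    : "${SUBNET_ID:?set SUBNET_ID to your subnet OCID}"
    : "${IMAGE_ID:?set IMAGE_ID to an Ubuntu image OCID}"
    : "${SSH_KEY_FILE:?set SSH_KEY_FILE to your public key file}"

    oci compute instance launch \
        --shape VM.GPU2.1 \
        --availability-domain "${AVAILABILITY_DOMAIN:-qIZq:US-ASHBURN-AD-1}" \
        --compartment-id "$COMPARTMENT_ID" \
        --assign-public-ip true \
        --subnet-id "$SUBNET_ID" \
        --image-id "$IMAGE_ID" \
        --ssh-authorized-keys-file "$SSH_KEY_FILE"
}
```

After setting the four required variables, calling `launch_gpu_instance` runs the same command as the one-liner.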

Here are some of the key details:

- Shape: I need an instance that has GPUs. VM.GPU2.1 has an NVIDIA Tesla P100 attached, which should do just fine for this purpose.

- Availability-Domain: I’m in qIZq:US-ASHBURN-AD-1. It doesn’t matter too much where your availability domain is (provided you can launch GPU instances there), but you do need to know for the next steps.

- Compartment ID: You can find your compartment by going to https://console.us-ashburn-1.oraclecloud.com/identity/compartments (you may need a different region than us-ashburn-1) and finding the OCID of your compartment.

- Subnet ID: You can find this in the Oracle Cloud Console by going to Networking -> Virtual Cloud Networks, selecting your network, selecting the subnet you wish to use, and then copying the OCID from there.

- Image ID: The image ID I picked is Ubuntu 18.04. Note that this is just an off-the-shelf Ubuntu image; there are no special GPU drivers pre-linked into it. You don’t have to pick any special GPU-enabled image. I found this image by going to https://docs.cloud.oracle.com/iaas/images/ubuntu-1804/, clicking on a suitable image (Minimal if you want a stripped-down, streamlined version; in my case I just went with the non-minimal offering), and then finding the right OCID for the right availability domain.

- Cloud-init.cloud-config: Cloud-init lets us set tons of parameters to influence first boot (and subsequent boots, too!). Check out all the things it can do here. We’re going to use it to showcase a new feature: NVIDIA driver installation.
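If you would rather stay in the terminal, the identifiers from the list above can also be looked up with the CLI instead of the web console. A rough sketch, assuming the `oci` CLI is already installed and configured; the function names are mine, and the `--query` expressions are just one way of trimming the JSON output down to names and OCIDs.

```shell
# Hypothetical lookup helpers for the values used by the launch command.
# Assumes a configured oci CLI (for example via `oci setup config`).

# List the compartments in your tenancy with their OCIDs.
list_compartments() {
    oci iam compartment list --all \
        --query 'data[].{name:name, id:id}' --output table
}

# List availability domain names.  $1: compartment OCID
list_availability_domains() {
    oci iam availability-domain list --compartment-id "$1" \
        --query 'data[].name'
}

# List subnets with their OCIDs.  $1: compartment OCID, $2: VCN OCID
list_subnets() {
    oci network subnet list --compartment-id "$1" --vcn-id "$2" \
        --query 'data[].{name:"display-name", id:id}' --output table
}
```

For example, `list_subnets <compartment id> <vcn id>` prints a table from which you can copy the subnet OCID.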

That still leaves the drivers: when you launch a GPU-enabled instance from a stock image, you have to work out which version of the NVIDIA GPU drivers to use and install them. This is not always straightforward, as the driver version depends on the kernel you have installed and the type of GPUs attached to the instance. Thankfully, cloud-init has a feature that streamlines this for us.

First, let’s add the following to my command above:

oci compute instance launch --shape VM.GPU2.1 --availability-domain "qIZq:US-ASHBURN-AD-1" --compartment-id <compartment id> --assign-public-ip true --subnet-id <subnet id> --image-id "ocid1.image.oc1.iad.aaaaaaaa7bcrfylytqnbsqcd6jwhp2o4m6wj4lxufo3bmijnkdbfr37wu6oa" --user-data-file cloud-init.cloud-config --ssh-authorized-keys-file <ssh-key-file>

Take note of the new command-line option, --user-data-file. This allows us to specify a cloud-init configuration file to influence behavior at first boot (and subsequent boots too! Check out more of what cloud-init does here). To get NVIDIA GPU drivers installed automatically, very little is needed in my cloud-init.cloud-config.

Let’s take a look at the feature at its most basic. If I open up cloud-init.cloud-config, I have the following contents in my file:

#cloud-config
drivers:
  nvidia:
    license-accepted: true

Under The Hood Of Cloud-init

This is all that’s needed for NVIDIA driver installation. This simple snippet of config will do the following:

- Install the ubuntu-drivers-common package

- Run the ubuntu-drivers command, which will:

  - Detect which kernel you are using (in this case linux-oracle)

  - Detect NVIDIA hardware installed in the system

  - Select the latest driver that satisfies your kernel and NVIDIA hardware

  - Install that driver and all dependencies for immediate use
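Once the instance is up, you can check from a shell whether these steps have completed. The sketch below is one way to do it, not the only one: `cloud-init status --wait` blocks until first-boot configuration has finished, and `nvidia_module_loaded` is a hypothetical helper (my own name) that scans `lsmod` output for the `nvidia` kernel module.

```shell
# Hypothetical helper: reads `lsmod` output on stdin and succeeds only if
# a module named exactly "nvidia" appears in the first column.
nvidia_module_loaded() {
    awk '$1 == "nvidia" { found = 1 } END { exit !found }'
}

# Usage on the instance (commented out so the sketch is safe to paste):
#   cloud-init status --wait     # block until cloud-init has finished
#   lsmod | nvidia_module_loaded && echo "NVIDIA kernel module is loaded"
```

If the check fails, the module may simply not have been loaded yet; `modinfo nvidia` tells you whether it is at least installed for the running kernel.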

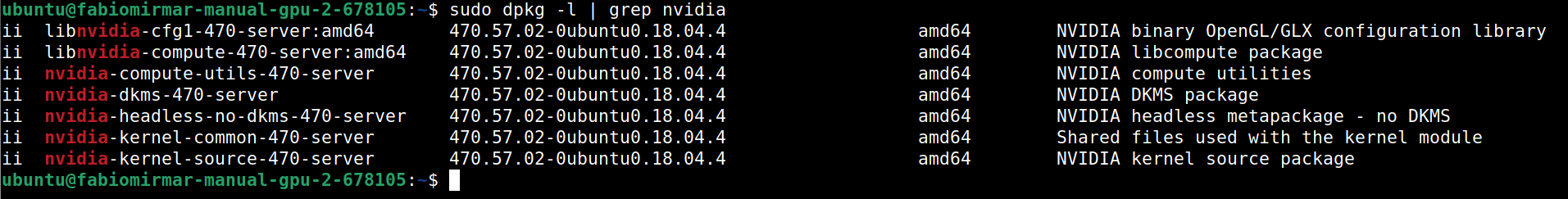

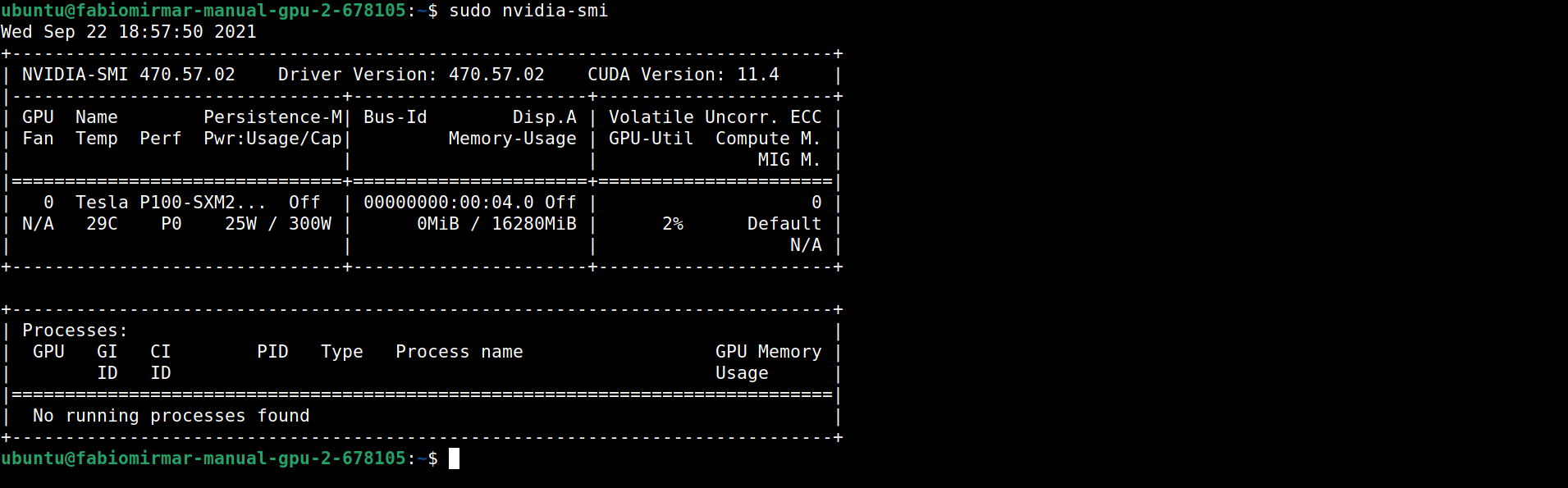

We can verify that the driver is properly installed by installing the matching nvidia-utils package with apt. Note that the nvidia-utils package must match the version and type of the driver that was installed by the ubuntu-drivers command executed by cloud-init. (This tool installs the latest matching driver in our archives, so your version may differ from mine.)

There are two types of NVIDIA drivers:

- UDA (Unified Driver Architecture) drivers: recommended for generic desktop use.

- ERD (Enterprise Ready Drivers): recommended on servers and for computing tasks. Their packages can be recognized by the “-server” suffix.

As an example, at the time of writing this blog post, the version 470 ERD driver (-server) was installed.

With the version and type in mind, you can list the available nvidia-utils packages with the “apt-cache search nvidia-utils” command. In this case, the package I’m looking for is nvidia-utils-470-server. After installing this package (apt install nvidia-utils-470-server), you should be able to use the nvidia-smi tool.
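Picking the package name can also be scripted. The helper below is a sketch under my own assumptions: `nvidia_utils_package` is a hypothetical function name, and it simply combines the major driver series with the optional “-server” suffix described above. The version string 470.57.02 is only an illustration of the format.

```shell
# Hypothetical helper: build the nvidia-utils package name for a driver.
#   $1: full driver version, e.g. the output of `modinfo -F version nvidia`
#   $2: "server" for the ERD flavour; empty or omitted for UDA
nvidia_utils_package() {
    series=${1%%.*}              # keep the major series: 470.57.02 -> 470
    if [ "${2:-}" = "server" ]; then
        echo "nvidia-utils-${series}-server"
    else
        echo "nvidia-utils-${series}"
    fi
}

nvidia_utils_package 470.57.02 server   # prints nvidia-utils-470-server

# Usage on the instance (commented out so the sketch is safe to paste):
#   sudo apt install "$(nvidia_utils_package "$(modinfo -F version nvidia)" server)"
#   nvidia-smi
```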

Our First Workload

Now that we have a driver installed, let’s test it out with a quick program utilizing CUDA. First, we’ll install a CUDA toolkit through apt (apt install nvidia-cuda-toolkit). From here, we can start compiling CUDA programs. Let’s tackle a “Hello World” application by implementing a quick “Vector Add” grabbed from a CUDA tutorial on the NVIDIA Dev Blog.

#include <iostream>
#include <math.h>

// Kernel function to add the elements of two arrays
__global__ void add(int n, float *x, float *y)
{
    for (int i = 0; i < n; i++)
        y[i] = x[i] + y[i];
}

int main(void)
{
    int N = 1<<20;
    float *x, *y;

    // Allocate Unified Memory, accessible from CPU or GPU
    cudaMallocManaged(&x, N*sizeof(float));
    cudaMallocManaged(&y, N*sizeof(float));

    // initialize x and y arrays on the host
    for (int i = 0; i < N; i++) {
        x[i] = 1.0f;
        y[i] = 2.0f;
    }

    // Run kernel on 1M elements on the GPU
    add<<<1, 1>>>(N, x, y);

    // Wait for GPU to finish before accessing on host
    cudaDeviceSynchronize();

    // Check for errors (all values should be 3.0f)
    float maxError = 0.0f;
    for (int i = 0; i < N; i++)
        maxError = fmax(maxError, fabs(y[i]-3.0f));
    std::cout << "Max error: " << maxError << std::endl;

    // Free memory
    cudaFree(x);
    cudaFree(y);

    return 0;
}

We can name this file ‘vector_add.cu’ and compile it with:

nvcc vector_add.cu -o nvidia_demo

If everything works correctly, you should be able to run `./nvidia_demo` and see the output of

“Max error: 0”

If you do, celebrate, because you just ran your first workload on a GPU-enabled Oracle Cloud instance (and hopefully thought it was easy, too!).

Off The Beaten Path

An Easier Way To Install Drivers On Existing Instances

This is great for launching new instances, but what if we want the same experience on instances that have already been launched? Don’t fear: there’s a new command that makes it just as simple to install NVIDIA GPGPU drivers on existing instances.

You can get the correct NVIDIA drivers by issuing the following commands:

$ sudo apt install ubuntu-drivers-common

$ sudo ubuntu-drivers install --gpgpu

As described previously, one important thing to note: installing with the “--gpgpu” option installs the server flavor of the NVIDIA drivers. If you’d like to use nvidia-smi to check the status of these drivers, you will need to install the server version of nvidia-utils. So in the above example, you would need to install nvidia-utils-470-server instead of nvidia-utils-470. If you have a mismatch between the server and non-server versions, nvidia-smi will fail to communicate with your drivers. You can look at the output of “dpkg -l | grep nvidia” to check for any mismatches.
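Spotting a mismatch by eye in a long package list is error-prone, so here is a sketch that condenses the “dpkg -l” output. `nvidia_series` is a hypothetical helper of my own, and it assumes package names follow the pattern seen in this post, such as nvidia-utils-470-server: a three-digit series, optionally followed by “-server”.

```shell
# Hypothetical helper: read `dpkg -l` output on stdin and print each
# distinct NVIDIA series/flavour among installed ("ii") packages,
# e.g. "470-server" or "470". More than one line suggests a mismatch.
nvidia_series() {
    awk '$1 == "ii" { print $2 }' |
        sed -n -E 's/^[a-z0-9-]*nvidia-([a-z]+-)*([0-9]{3})(-server)?.*$/\2\3/p' |
        sort -u
}

# Usage on the instance (commented out so the sketch is safe to paste):
#   dpkg -l | nvidia_series
```

A single line of output such as 470-server means every installed NVIDIA package agrees; seeing both 470 and 470-server is the mismatch described above.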

Keep in mind that, instead of letting the ubuntu-drivers tool decide which NVIDIA driver version to install, you can explicitly request a specific version, as in the examples below (you may also refer to the NVIDIA Drivers Installation wiki for more options):

$ sudo ubuntu-drivers install --gpgpu 450-server

<OR>

$ sudo ubuntu-drivers install --gpgpu nvidia:450-server

Note that NVIDIA A100 GPUs (provided in certain Oracle instance shapes, such as BM.GPU4.8) also require fabricmanager. You can install it with “apt install cuda-drivers-fabricmanager-<version>”, where the version must match the NVIDIA driver version that you are using.

Using This Feature On Other Clouds

We’ve been demonstrating this on the Oracle Cloud so far, but cloud-init is a tool that works across multiple clouds. Be on the lookout for future blog posts talking about other clouds utilizing this feature. One thing to note is that our Oracle Cloud images have some special tweaks in them to get the NVIDIA drivers working seamlessly.

If you are trying this feature out on other clouds, you may need to blacklist the Nouveau drivers. This is pretty well documented in the CUDA Installation Guide for Linux.
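For reference, blacklisting Nouveau on Ubuntu usually comes down to a two-line modprobe configuration file. The sketch below writes that file to a path you pass in; `write_nouveau_blacklist` is a hypothetical helper name, and you should check the guide for your own distribution before relying on it.

```shell
# Hypothetical helper: write a modprobe config that blacklists Nouveau.
#   $1: destination file (on a real system this is typically
#       /etc/modprobe.d/blacklist-nouveau.conf, written as root)
write_nouveau_blacklist() {
    cat > "$1" <<'EOF'
blacklist nouveau
options nouveau modeset=0
EOF
}
```

On the instance you would write the file as root, regenerate the initramfs with `sudo update-initramfs -u`, and reboot so that the change takes effect.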

Wrap-up

So there you have it. This method of installation should help eliminate the fussy bits of NVIDIA driver installation. Whether using cloud-init or ubuntu-drivers-common, your journey to GPU workloads in the cloud should be significantly shorter moving forward. So go give it a shot, and let me know what you think.
